Sketch-based understanding is a key process in visual perception. Sketching is also a natural and primitive means of communication; it is how our ancestors transmitted ideas, stories, and activities.

Given the critical role sketching plays in visual perception, it is natural to bring this process into computer vision. Indeed, the computer vision community has shown increasing interest in this topic, addressing a variety of sketch-based tasks such as sketch classification, sketch representation learning, sketch-guided object localization, sketch-based image retrieval, and sketch-to-photo translation. This growing interest across sketch-based understanding tasks can be attributed to the development of deep neural networks capable of extracting semantic representations from images, and to the ever-growing availability of smartphones that can serve as drawing tablets.

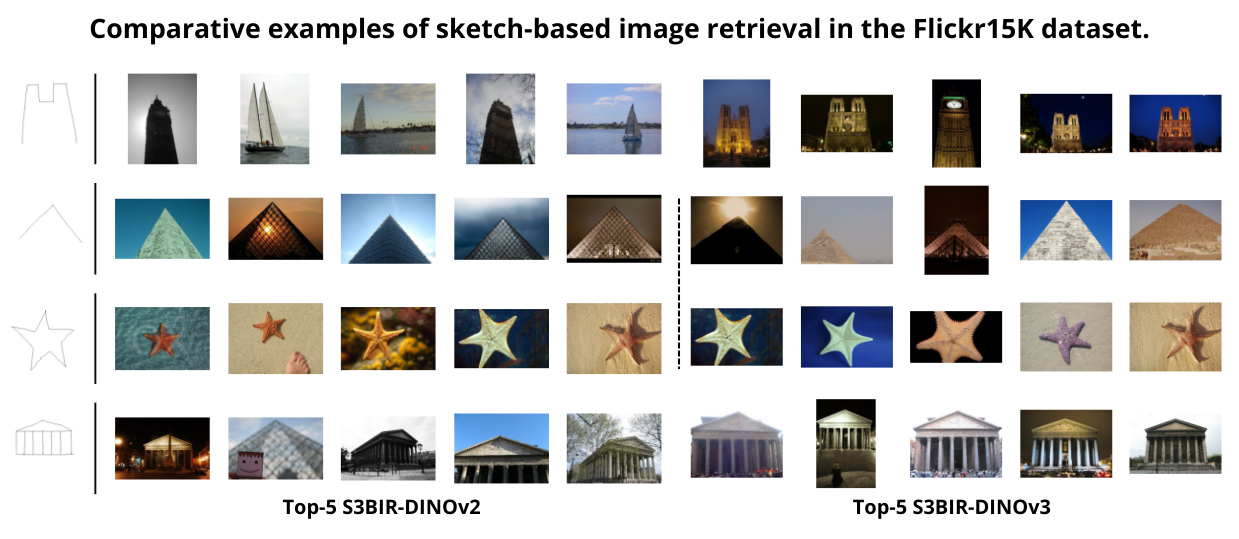

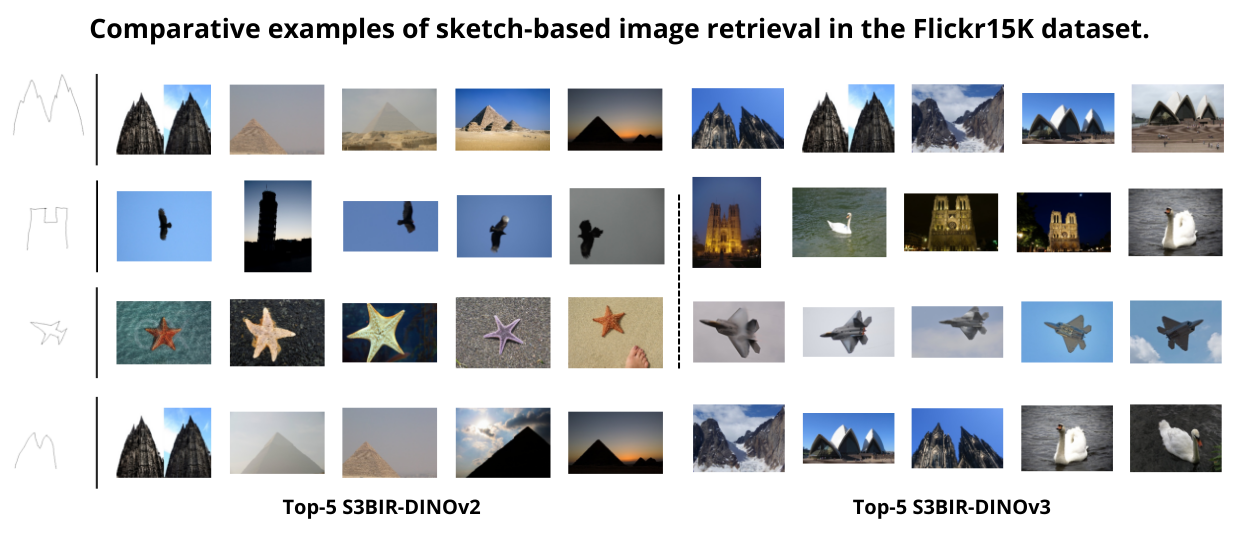

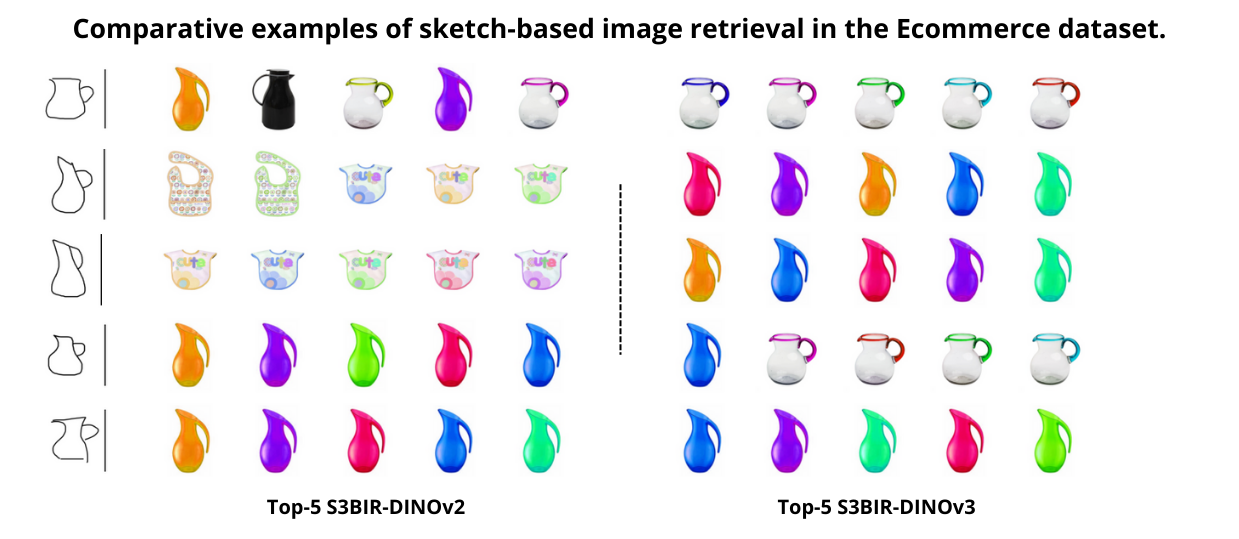

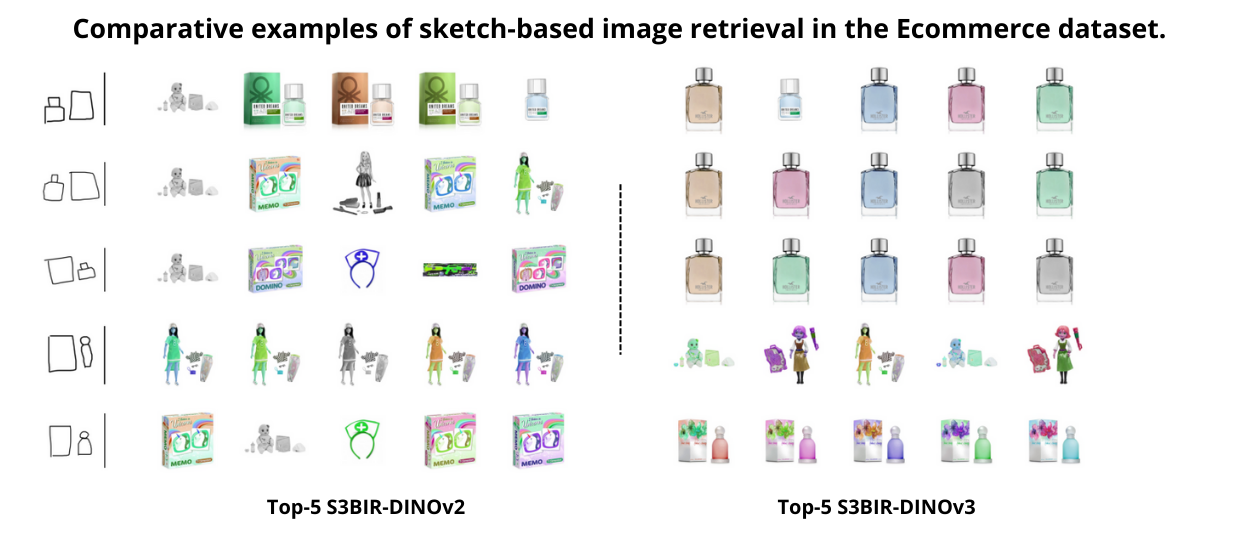

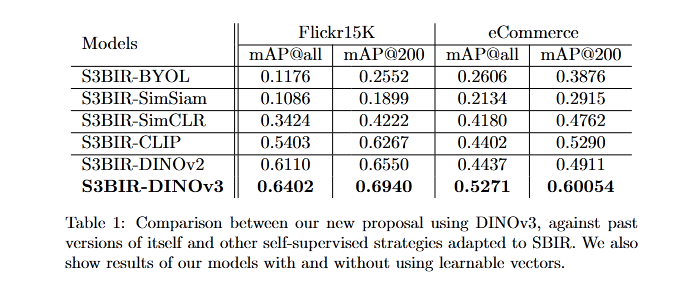

To evaluate the effectiveness of our approach, we present both quantitative and qualitative results. The table below summarizes the performance of various self-supervised learning strategies adapted to sketch-based image retrieval (SBIR) on two benchmark datasets, Flickr15K and eCommerce. Our proposed model, S3BIR-DINOv3, consistently outperforms the previous methods across all metrics, with substantial improvements in retrieval accuracy.
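SBIR results are commonly reported with ranking metrics such as mean average precision (mAP) and precision@k. The text above does not list which metrics the table uses, so the following is only a minimal sketch of those two common metrics, assuming each query's results are given as a ranked list of binary relevance labels (1 = the retrieved image matches the sketch's class). It is not the evaluation code used for S3BIR.

```python
from typing import Sequence


def average_precision(relevance: Sequence[int]) -> float:
    """AP for one sketch query, given binary relevance of its ranked results.

    Assumes the full ranking is provided, so every relevant gallery image
    appears somewhere in `relevance`.
    """
    hits = 0
    precision_sum = 0.0
    for rank, rel in enumerate(relevance, start=1):
        if rel:
            hits += 1
            precision_sum += hits / rank  # precision at this relevant position
    return precision_sum / hits if hits else 0.0


def mean_average_precision(rankings: Sequence[Sequence[int]]) -> float:
    """mAP: the mean of per-query AP over all sketch queries."""
    return sum(average_precision(r) for r in rankings) / len(rankings)


def precision_at_k(relevance: Sequence[int], k: int) -> float:
    """Fraction of the top-k retrieved images that are relevant."""
    return sum(relevance[:k]) / k


# Two toy queries: relevant results at ranks 1 and 3, and at rank 2.
print(mean_average_precision([[1, 0, 1, 0], [0, 1]]))
print(precision_at_k([1, 0, 1, 0, 0], k=5))
```

mAP rewards placing relevant images early across the whole ranking, while precision@k only looks at the first k results, which is why the two are often reported together.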

Additionally, the visual comparisons illustrate the quality of the images retrieved for a given sketch query. We compare the top-5 results obtained with S3BIR-DINOv2 and S3BIR-DINOv3 on the Flickr15K and eCommerce datasets, highlighting the better semantic alignment and precision of the new model.
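Retrieving the top-5 images for a sketch query usually comes down to ranking gallery embeddings by their similarity to the sketch embedding. The sketch below assumes that both sketches and photos have already been mapped to vectors by a shared encoder (for example a DINO-family backbone) and that cosine similarity is the ranking score. The encoder is not shown, and the gallery IDs and toy vectors are hypothetical placeholders, not S3BIR's actual pipeline.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(
    sketch_emb: Sequence[float],
    gallery: Dict[str, Sequence[float]],
    k: int = 5,
) -> List[Tuple[str, float]]:
    """Return the k gallery images most similar to the sketch, best first."""
    scored = [(image_id, cosine(sketch_emb, emb)) for image_id, emb in gallery.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Hypothetical gallery: toy 3-d vectors standing in for real image embeddings.
gallery = {
    "img_a": [1.0, 0.0, 0.0],
    "img_b": [0.0, 1.0, 0.0],
    "img_c": [1.0, 1.0, 0.0],
}
for image_id, score in top_k([1.0, 0.0, 0.0], gallery, k=2):
    print(image_id, round(score, 3))
```

For large galleries, the embeddings would typically be L2-normalized once and scored with a single matrix product instead of this per-item loop, but the ranking it produces is the same.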